The Hidden Power of Repeated Mistakes

Today’s featured startup is tackling education’s biggest time sink: grading.

Project Overview

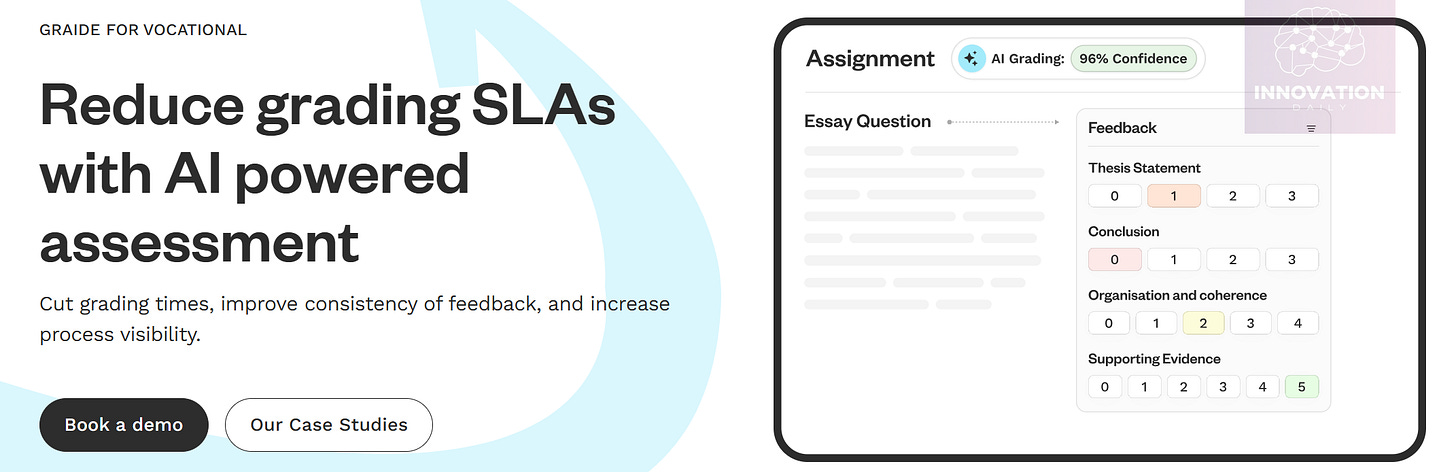

Graide is a UK-based platform with an embedded AI engine that helps educators assess student work — from homework to exams — with precision and speed.

It’s designed for universities, exam boards, and professional training programs, and it supports grading in subjects like math, physics, biology, chemistry, economics, and engineering.

Recently, Graide added essay grading to its toolbox, and in pilot tests the AI’s grades matched those of human teachers 99% of the time. But the platform doesn’t just assign grades; it also offers personalized feedback, pointing out errors and suggesting simpler or more standard solutions.

A standout feature: Graide can interpret handwritten formulas, making it especially useful for math and physics assignments.

The startup was founded in 2021 by a group of former teaching assistants who were overwhelmed by the tedious task of manually grading assignments. Tired of the repetition, they built Graide to automate the process.

The platform is already being used by several British universities and is now running pilot programs at institutions in the U.S. Graide recently raised £1.7 million, bringing total funding to £2.5 million.

What’s the Gist?

Sure, in theory, anyone could plug a student’s assignment into ChatGPT and ask it to evaluate it. But Graide is much more than a one-shot AI prompt.

The platform takes a more thoughtful approach. When it receives a student’s work, the AI first interprets what’s written. Then it compares the answer against its internal database of feedback that real instructors have already given on similar answers:

If a near-identical answer has been graded before, Graide auto-generates a response based on that previous feedback.

If the answer partially overlaps with past work, it drafts partial feedback and passes it to the instructor for review and completion.

If it’s something entirely new, the AI hands off the task to the teacher — who writes the feedback manually. That new feedback is then added to the AI’s database for future use.

Educators can adjust the "similarity threshold" manually to control how much of the grading the AI handles.

Over time, as the AI learns from each reviewed submission, it becomes capable of handling more tasks on its own — automatically assessing the majority of student work.

This works because education is, by nature, cyclical. Teachers cover the same topics year after year, and students tend to make the same mistakes. Graide leverages this pattern to group similar assignments together, saving educators time. And when errors repeat, the AI can eventually take over much of the routine grading.
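The three-way routing described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Graide's actual implementation: the names `FeedbackStore`, `triage`, and `record_feedback` are hypothetical, the two cutoffs (`auto_threshold`, `partial_threshold`) are made-up stand-ins for the adjustable "similarity threshold", and plain string similarity from the standard library's `difflib` stands in for whatever model the real platform uses to compare answers.

```python
from dataclasses import dataclass, field
from difflib import SequenceMatcher
from typing import List, Optional, Tuple


@dataclass
class FeedbackStore:
    """Hypothetical store of (past answer, instructor feedback) pairs."""
    entries: List[Tuple[str, str]] = field(default_factory=list)

    def best_match(self, answer: str) -> Tuple[float, Optional[str]]:
        """Return (similarity, feedback) for the closest stored answer."""
        best_score, best_feedback = 0.0, None
        for past_answer, feedback in self.entries:
            # Assumption: character-level similarity in [0, 1] as a stand-in metric.
            score = SequenceMatcher(None, answer, past_answer).ratio()
            if score > best_score:
                best_score, best_feedback = score, feedback
        return best_score, best_feedback


def triage(answer: str, store: FeedbackStore,
           auto_threshold: float = 0.9,
           partial_threshold: float = 0.5) -> Tuple[str, Optional[str]]:
    """Route an answer to 'auto', 'draft', or 'manual' (thresholds are assumed values)."""
    score, feedback = store.best_match(answer)
    if feedback is not None and score >= auto_threshold:
        return ("auto", feedback)    # near-identical: reuse past feedback
    if feedback is not None and score >= partial_threshold:
        return ("draft", feedback)   # partial overlap: instructor reviews a draft
    return ("manual", None)          # new answer: instructor writes feedback


def record_feedback(store: FeedbackStore, answer: str, feedback: str) -> None:
    """Add instructor-written feedback so later similar answers can reuse it."""
    store.entries.append((answer, feedback))


store = FeedbackStore()
print(triage("x = 2", store))            # nothing stored yet
record_feedback(store, "x = 2", "Check the sign when moving terms across.")
print(triage("x = 2", store))            # identical answer seen before
print(triage("x = 3", store))            # similar but not identical
```

Raising `auto_threshold` in this sketch sends more work to the instructor, while lowering it lets more answers be handled automatically, which is the trade-off the adjustable threshold exposes.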

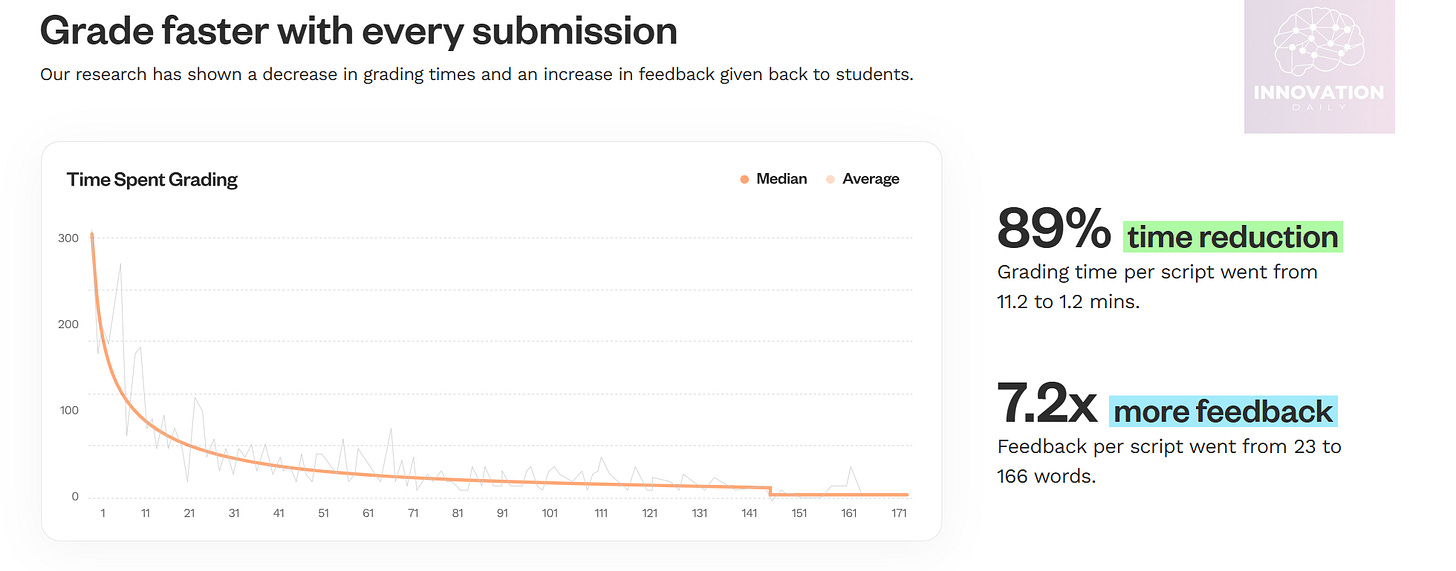

The results? A dramatic drop in grading time — from 11.2 minutes to just 2.8 minutes per assignment. Even more impressive: the average length of feedback increased from 23 words to 166 words. The AI does the heavy lifting, so teachers are no longer fatigued by writing the same comment dozens of times.

And for students, this means more detailed and helpful feedback, which leads to better understanding and learning.
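To put the reported figures in perspective, here is the arithmetic as a quick Python calculation. The four input numbers come from the results above; the batch of 100 assignments is a hypothetical example, not a figure from Graide.

```python
# Figures reported above.
minutes_before, minutes_after = 11.2, 2.8
words_before, words_after = 23, 166

time_saved = 1 - minutes_after / minutes_before   # fraction of grading time saved
speedup = minutes_before / minutes_after          # how many times faster
feedback_growth = words_after / words_before      # how many times longer

print(f"Time saved per assignment: {time_saved:.0%}")       # 75%
print(f"Speed-up: {speedup:.1f}x")                          # 4.0x
print(f"Feedback length: {feedback_growth:.1f}x as long")   # 7.2x

# Hypothetical batch size, for illustration only.
batch = 100
hours_before = minutes_before * batch / 60
hours_after = minutes_after * batch / 60
print(f"{batch} assignments: {hours_before:.1f} h -> {hours_after:.1f} h")
```

In other words, grading takes about a quarter of the time while each student receives roughly seven times as much written feedback.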

Key Takeaways

Feedback is the cornerstone of effective learning. If it weren’t, we’d all be geniuses after watching a few online tutorials.

But high-quality feedback takes time — and time is the most limited resource a teacher has. The more feedback a student gets, the better their chances of learning. But scaling that feedback often means sacrificing quality or driving up costs.

This creates a core dilemma: either teachers burn out trying to give rich feedback to every student, or they scale back and let some learners fall through the cracks.

Generic AI tools don’t help much here. Good teachers all teach differently (only bad ones stick rigidly to the textbook), so a one-size-fits-all model can’t reproduce any one instructor’s feedback. What Graide understands is that the mistakes students make are often predictable. And that insight is the key to scaling meaningful feedback without sacrificing quality.

By identifying and responding to typical errors, platforms like Graide let educators scale up without scaling effort. Whether you’re teaching 10 students or 1,000, the time required for feedback doesn’t change much — because the error patterns stay the same.

This same approach powers Kyron Learning, a U.S. startup that raised $20.1 million. Their platform features interactive video lessons in school subjects. After each concept is taught, the system quizzes the student. If they get it right, it moves on. If not, it plays a pre-recorded explanation of that exact mistake — crafted by experienced educators who know the common pitfalls.
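The quiz-then-explain loop can be sketched as a simple lookup from known wrong answers to pre-recorded clips. Everything in this sketch is hypothetical: the question, the clip filenames, the `next_step` function, and the fallback clip for unanticipated mistakes are illustrative assumptions, not details of Kyron's product.

```python
# Hypothetical quiz item: the correct answer plus clips keyed by known wrong answers.
QUESTION = {
    "prompt": "What is 1/2 + 1/3?",
    "correct": "5/6",
    "clips_by_mistake": {
        "2/5": "clip_added_numerators_and_denominators.mp4",
        "1/6": "clip_multiplied_instead_of_added.mp4",
    },
    # Assumption: a generic review clip covers mistakes nobody anticipated.
    "fallback_clip": "clip_general_review.mp4",
}


def next_step(question: dict, student_answer: str) -> str:
    """Return 'advance' for a correct answer, else the clip matching the mistake."""
    answer = student_answer.strip()
    if answer == question["correct"]:
        return "advance"
    return question["clips_by_mistake"].get(answer, question["fallback_clip"])


print(next_step(QUESTION, "5/6"))   # correct answer: move on
print(next_step(QUESTION, "2/5"))   # known mistake: targeted explanation
print(next_step(QUESTION, "7/8"))   # unknown mistake: fallback clip
```

The design point is that the expensive part, an expert's explanation of a specific mistake, is produced once and reused every time a student makes that mistake.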

In both Kyron and Graide, the secret sauce isn’t AI itself; it’s the insight that most mistakes are predictable. By building platforms around those patterns, they automate high-quality feedback in ways that actually help students learn.

There’s plenty of room to build more tools like this. Whether in education or another domain, the principle remains powerful:

Automate feedback by learning from common human errors.

What’s another area where you could apply the same model?

Company info:

Graide

Website: graide.co.uk

Last round: £1.7M, 27 February 2024

Total investments: £2.5M, across 2 rounds